In July of 2002, the Director of the Office of Management and Budget announced development of a tool for formally evaluating the effectiveness of federal programs, called the Program Assessment Rating Tool (PART). He described the PART’s purposes as follows:

The program assessment effort presents an opportunity to inform and improve agency GPRA plans and reports, and establish a meaningful systematic link between GPRA and the budget process.

OMB’s guidance contained this description of the PART:

The Program Assessment Rating Tool (PART) is a diagnostic tool used to assess the performance of Federal programs and to drive improvements in program performance. Once completed, PART reviews help inform budget decisions and identify actions to improve results. Agencies are held accountable for implementing PART follow-up actions, also known as improvement plans, for each of their programs. The PART is designed to provide a consistent approach to assessing and rating programs across the Federal government. PART assessments review overall program effectiveness, from how well a program is designed to how well it is implemented and what results it achieves.

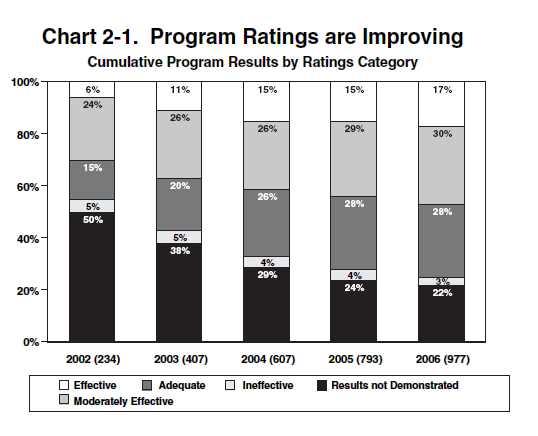

The PART questionnaire was divided into four sections: 1. Program Purpose & Design, 2. Strategic Planning, 3. Program Management, and 4. Program Results. Points were awarded to a program based on the answer to each question, and an overall rating of effectiveness was then assigned. There were five possible ratings: Effective, Moderately Effective, Adequate, Ineffective, and Results Not Demonstrated.

OMB subjected all federal programs to PART evaluations. The effort began with assessments and ratings of 234 programs covering approximately 20% of the federal budget, with the results published in the President’s FY 2004 Budget. OMB then extended PART evaluations to an additional 20% of federal programs (or budget coverage) each year, and selectively re-evaluated previously “PARTed” programs when there was reason to believe the rating might change. OMB last used the PART in 2008. The Obama Administration then dropped the tool in favor of a new emphasis on using performance information to manage programs, addressing a shortcoming that had concerned OMB and others for several years. This focus on the effective use of performance information in program management was underscored by the enactment of the GPRA Modernization Act of 2010, which updated the Government Performance and Results Act of 1993.

The basic PART instrument, which covered the category of Direct Federal Programs, contained 25 questions. These questions were organized into four sections, each assigned a weight for calculating an overall score:

| Section | Title | Weight |
|---|---|---|
| I. | Program Purpose & Design | 20% |
| II. | Strategic Planning | 10% |
| III. | Program Management | 20% |
| IV. | Program Results/Accountability | 50% |

In addition to the 25 questions on the basic PART instrument, certain types of programs had several additional questions relating to their special characteristics. There were 6 such categories of programs that had their own unique set of additional questions. The 7 categories of federal programs were:


Each question in the first three sections of the PART was answered in a Yes/No format. Questions in section 4 (Program Results/Accountability) could be answered as Yes, Large Extent, Small Extent or No. When a PART was completed for a program, along with each answer there was a brief explanation that included a description of the relevant evidence substantiating the answer. The questions within each section were given equal weight, unless the evaluator decided to alter their weight to emphasize certain key factors of importance to the program.
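The scoring arithmetic described above can be sketched roughly as follows. This is an illustrative sketch, not OMB's actual worksheet: the section weights (20/10/20/50) and the equal-weight default come from the text, while the partial-credit values for Large Extent and Small Extent (two-thirds and one-third) are assumptions, and the names `SECTION_WEIGHTS`, `ANSWER_CREDIT`, `section_score`, and `overall_score` are hypothetical.

```python
# Section weights (percent of the overall score), as listed in the text.
SECTION_WEIGHTS = {
    "purpose_design": 20,
    "strategic_planning": 10,
    "program_management": 20,
    "results": 50,
}

# Credit earned per answer. Full credit for Yes and none for No follow from
# the text; the two partial-credit values are ASSUMPTIONS for illustration.
ANSWER_CREDIT = {
    "Yes": 1.0,
    "Large Extent": 2 / 3,
    "Small Extent": 1 / 3,
    "No": 0.0,
}


def section_score(answers, question_weights=None):
    """Return the share (0.0 to 1.0) of available credit earned in a section.

    Questions are weighted equally unless the evaluator supplies
    question_weights to emphasize certain questions.
    """
    if question_weights is None:
        question_weights = [1.0] * len(answers)
    if len(question_weights) != len(answers):
        raise ValueError("need exactly one weight per answer")
    earned = sum(w * ANSWER_CREDIT[a] for a, w in zip(answers, question_weights))
    return earned / sum(question_weights)


def overall_score(answers_by_section):
    """Combine section scores into a 0-100 score using the section weights."""
    return sum(
        SECTION_WEIGHTS[name] * section_score(answers)
        for name, answers in answers_by_section.items()
    )


# Hypothetical program; the question counts here are made up for brevity.
example = {
    "purpose_design": ["Yes"] * 5,
    "strategic_planning": ["Yes"] * 6 + ["No"] * 2,
    "program_management": ["Yes"] * 4 + ["No"],
    "results": ["Yes", "Large Extent", "Small Extent", "No"],
}
print(round(overall_score(example), 1))
```

Because Program Results/Accountability carries half of the total weight, a program with strong design and management can still land a middling overall score if its results answers are weak, which is what the hypothetical example illustrates.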

The PART’s instructions made clear that a high standard had been set for compliance with what each question sought, and that the burden of proof was on the program to show that it had fully met the evidentiary requirements in order to be awarded a Yes.

A Yes answer must be definite and reflect a high standard of performance. . . . Each question requires a clear explanation of the answer and citations of relevant supporting evidence, such as agency performance information, independent evaluations, and financial information. Responses must be evidence-based and not rely on impressions or generalities.

OMB converted the PART scores into qualitative ratings using the following scoring bands:

| Rating | Range |
|---|---|
| Effective | 85 – 100 |
| Moderately Effective | 70 – 84 |
| Adequate | 50 – 69 |
| Ineffective | 0 – 49 |

However, regardless of the overall score, a rating of Results Not Demonstrated (RND) was given if the program did not have performance measures that had been agreed-upon by OMB, or if the measures lacked baselines and performance data.
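As a rough illustration, the conversion from a numeric score to a rating, including the Results Not Demonstrated override, might look like the sketch below. The function name `part_rating` and its two boolean parameters are hypothetical. The band edges come from the scoring bands above, and treating a fractional score such as 84.5 as falling in the lower band is an assumption, since the published ranges are stated in whole numbers.

```python
def part_rating(score, has_agreed_measures=True, has_baselines_and_data=True):
    """Map a 0-100 PART score to a qualitative rating.

    The RND check runs first because, per the text, it applies
    regardless of the overall score.
    """
    if not (has_agreed_measures and has_baselines_and_data):
        return "Results Not Demonstrated"
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    # ASSUMPTION: fractional scores fall into the band whose lower bound
    # they meet, so 84.5 is rated Moderately Effective.
    if score >= 85:
        return "Effective"
    if score >= 70:
        return "Moderately Effective"
    if score >= 50:
        return "Adequate"
    return "Ineffective"


print(part_rating(92))                             # Effective
print(part_rating(92, has_agreed_measures=False))  # Results Not Demonstrated
```

Checking the override before the bands mirrors the rule that a high score alone could not earn a program a favorable rating when its performance measures were missing or unsupported.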

The following chart published by OMB showed a steady improvement in PART ratings achieved by federal programs over time: